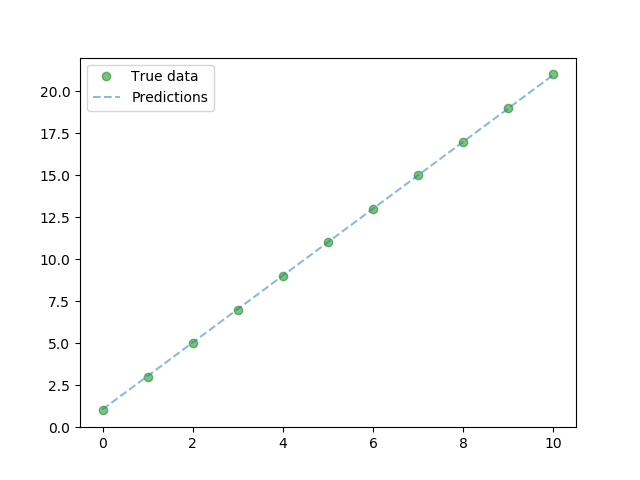

Linear regression is an approach that tries to find a linear relationship between a dependent variable and an independent variable by fitting a line that minimizes the distance between its predictions and the observed data points, as shown below.

In this post, I’ll show how to implement a simple linear regression model using PyTorch.

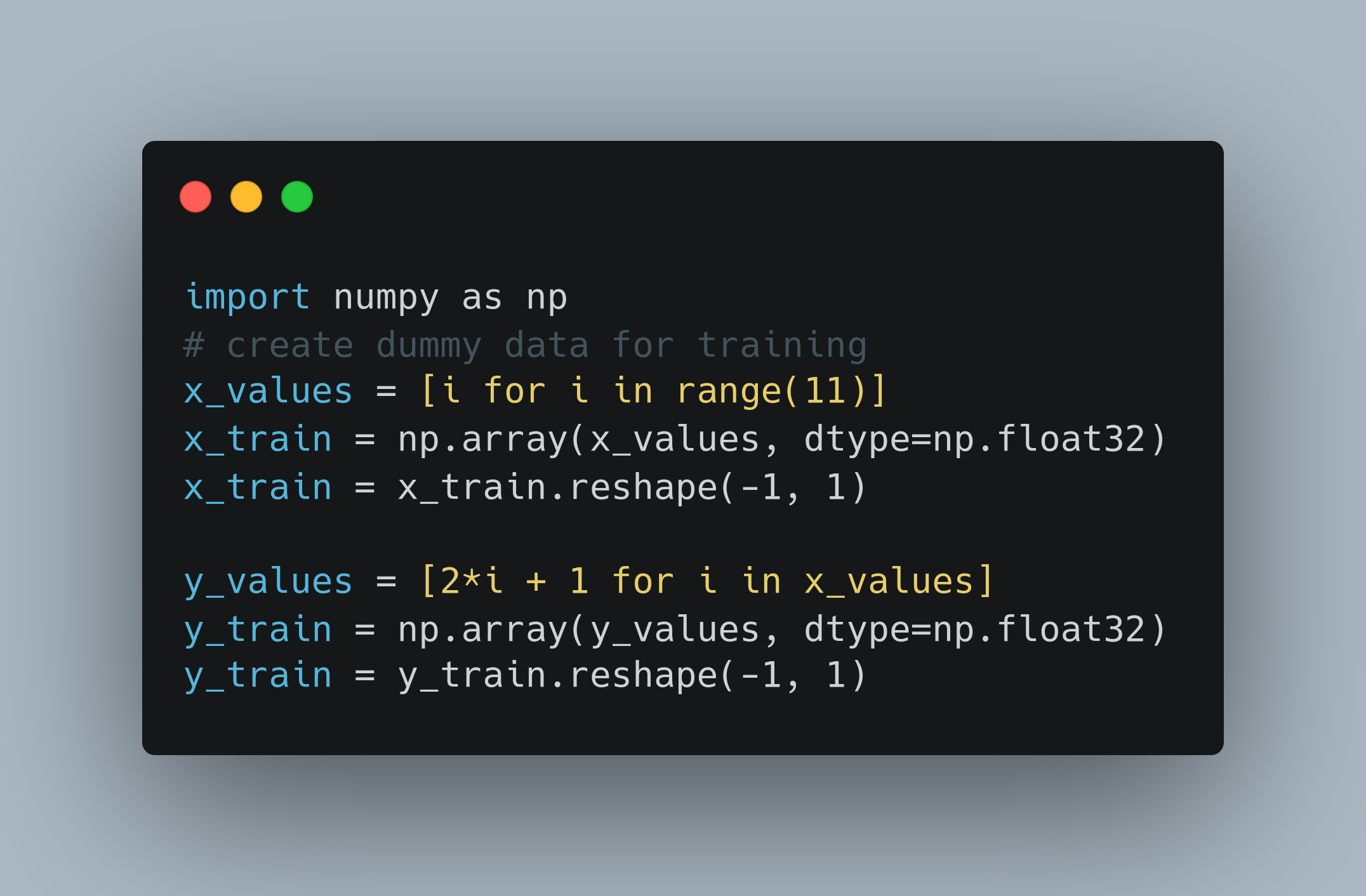

Let’s consider a very basic linear equation: y = 2x + 1. Here, x is the independent variable and y is the dependent variable. We’ll use this equation to create a dummy dataset for training the linear regression model. The code for creating the dataset follows.

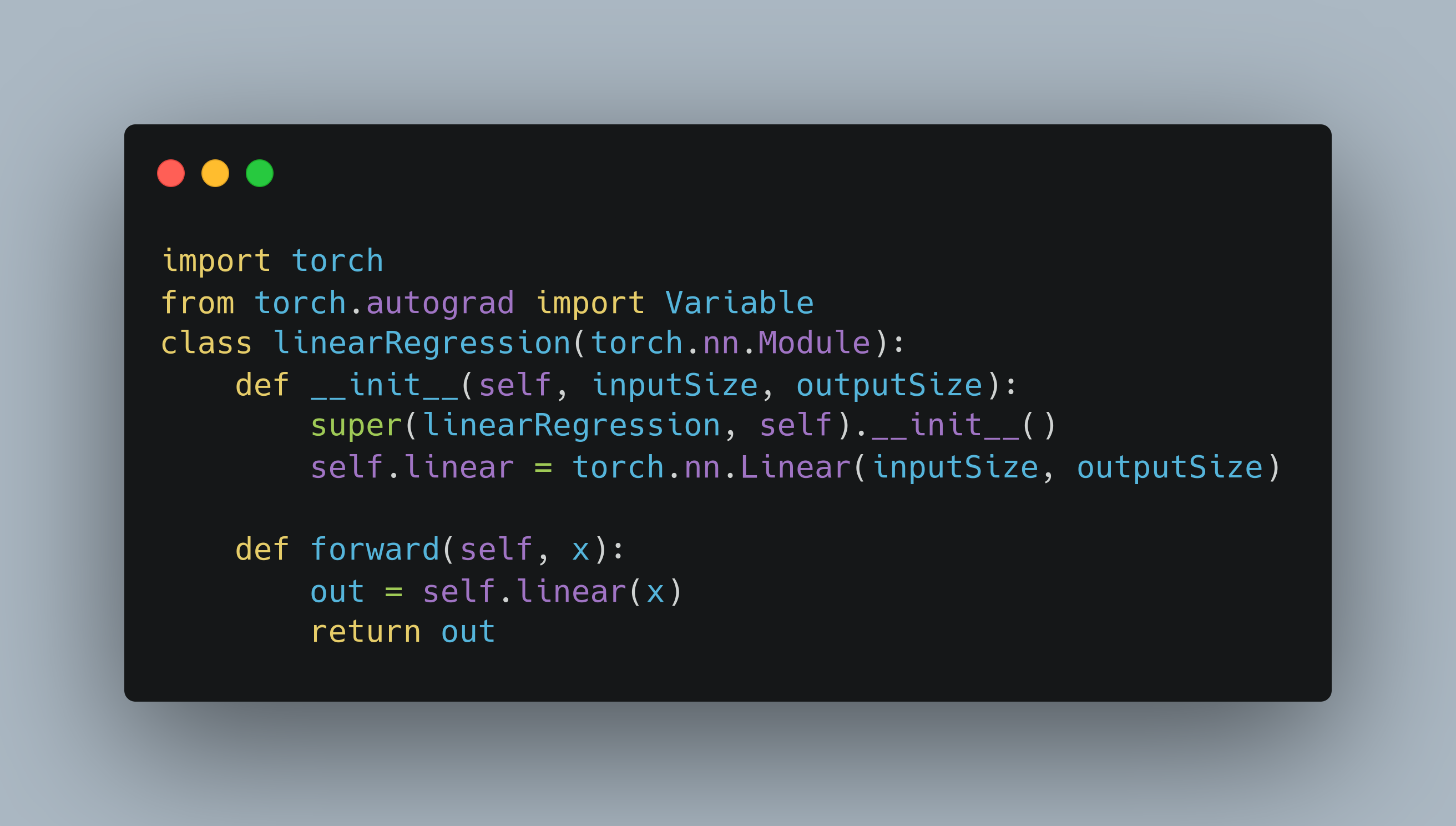
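For readers who want something to copy, here is a minimal sketch of one way to build such a dataset with NumPy. The range of x values (0 through 10) and the names `x_train` and `y_train` are assumptions made for illustration; only the equation y = 2x + 1 comes from the text.

```python
import numpy as np

# Assumed inputs: 11 evenly spaced values 0..10, reshaped to (N, 1)
# so that each row is one sample with a single feature.
x_train = np.arange(11, dtype=np.float32).reshape(-1, 1)

# Targets follow the equation from the text: y = 2x + 1
y_train = 2 * x_train + 1
```

Keeping the arrays as `float32` with shape `(N, 1)` matches what a PyTorch linear layer expects once they are converted to tensors.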

Once we have created the dataset, we can start writing the code for our model. The first step is to define the model architecture. We do that using the following piece of code.

We defined a class for linear regression that inherits from torch.nn.Module, the base class for all neural network modules in PyTorch. Our linear regression model contains only a single linear layer.

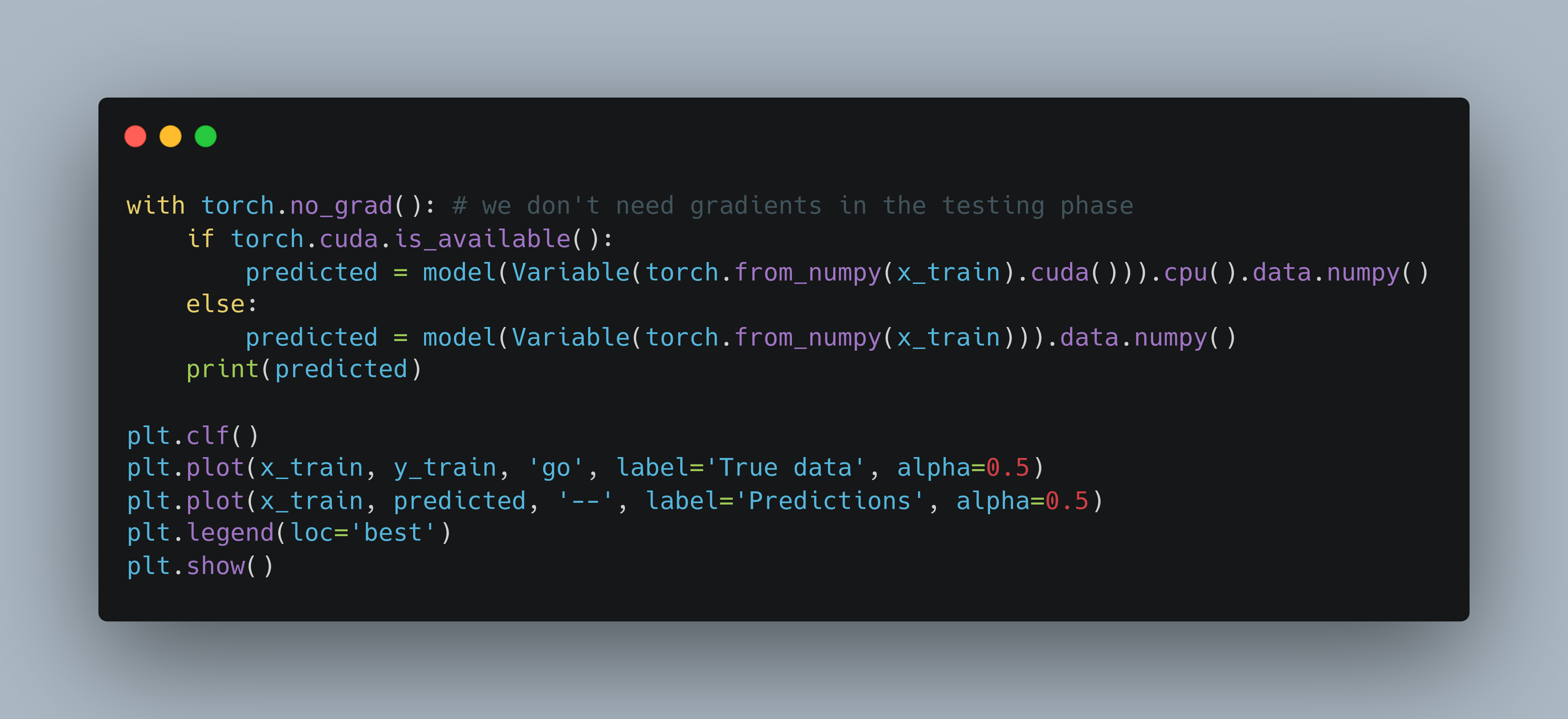
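A model class matching this description might look like the sketch below. The class name `LinearRegression`, the attribute name `linear`, and the constructor arguments `input_size` and `output_size` are illustrative choices, not necessarily the ones used in the original code.

```python
import torch


class LinearRegression(torch.nn.Module):
    """A single linear layer: y_hat = w * x + b."""

    def __init__(self, input_size: int, output_size: int):
        super().__init__()
        # torch.nn.Linear holds the learnable weight and bias
        self.linear = torch.nn.Linear(input_size, output_size)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Apply the linear transformation to a batch of inputs
        return self.linear(x)
```

Calling the model on a tensor of shape `(N, 1)` returns predictions of shape `(N, 1)` when both sizes are 1.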

Next, we instantiate the model using the following code.

After that, we initialize the loss function (mean squared error) and the optimizer (stochastic gradient descent) that we’ll use to train this model.
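The instantiation and the loss/optimizer setup described above can be sketched as follows. The model class is repeated so the snippet runs on its own, and the learning rate of 0.01 and the variable names `criterion` and `optimizer` are assumptions chosen for illustration.

```python
import torch


class LinearRegression(torch.nn.Module):
    # Repeated here only so this snippet is self-contained
    def __init__(self, input_size: int, output_size: int):
        super().__init__()
        self.linear = torch.nn.Linear(input_size, output_size)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.linear(x)


# One input feature (x) and one output value (y)
model = LinearRegression(input_size=1, output_size=1)

# Mean squared error between predictions and targets
criterion = torch.nn.MSELoss()

# Stochastic gradient descent over the model's weight and bias;
# lr=0.01 is an assumed value, not taken from the original post
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
```

The learning rate usually needs some tuning: too large a value can make the loss diverge, while too small a value slows convergence.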